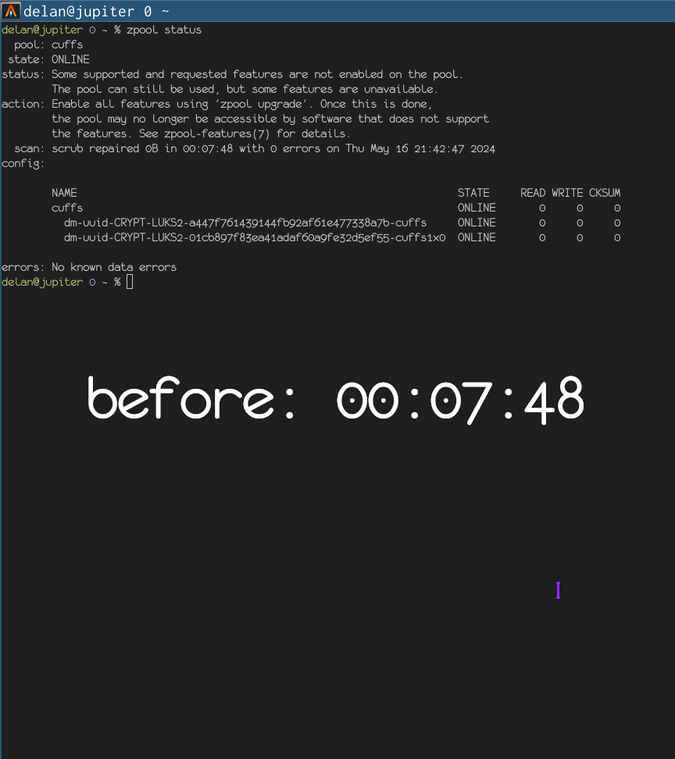

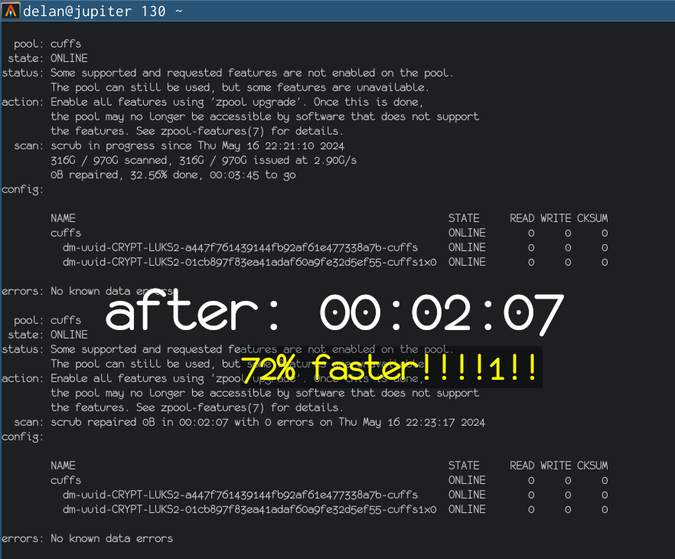

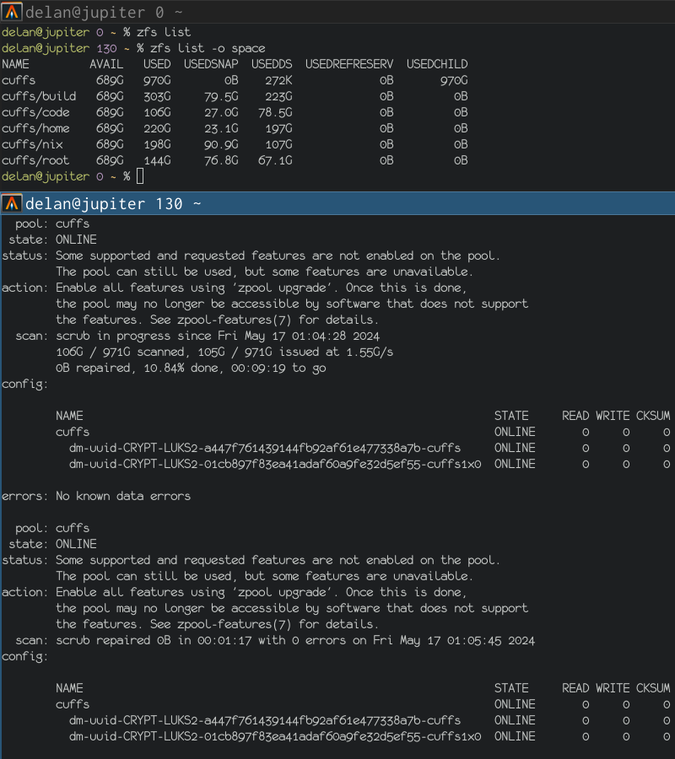

waow this one small trick will make your zpool scrub faster!!

we’ve done it. blazingly fast, zero-cost scrubbing

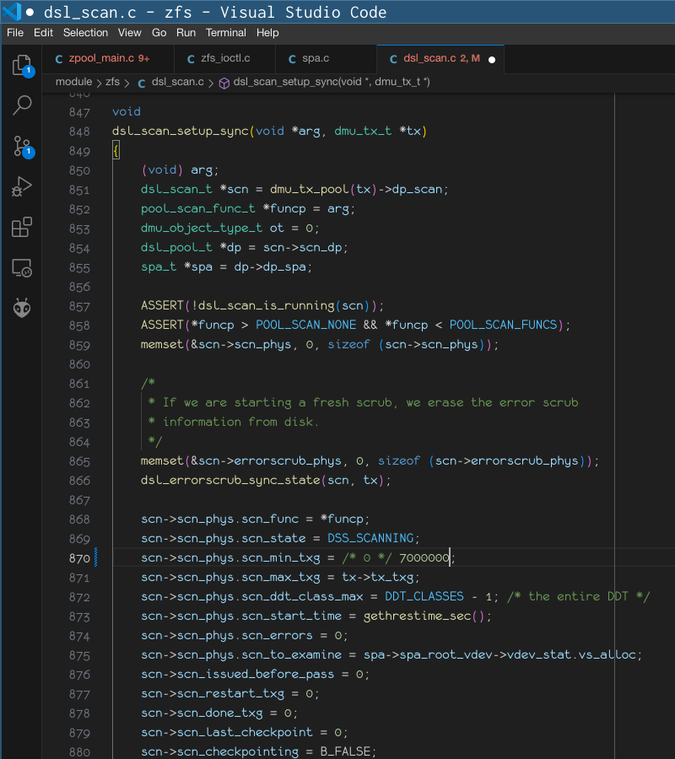

one small problem. i have no idea what range of txgs i want to scrub

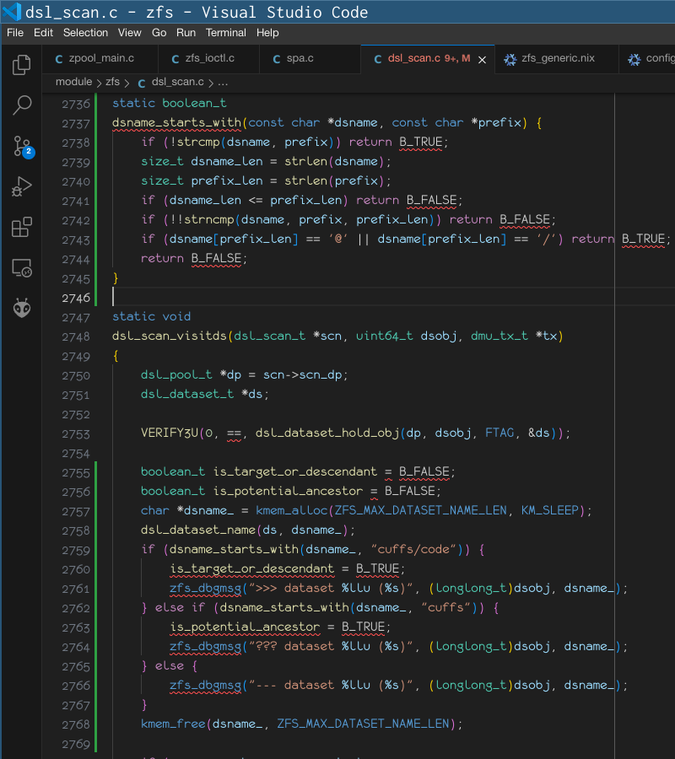

friendship ended with world’s most scuffed reimplementation of openzfs/zfs#15250. now world’s most scuffed reimplementation of openzfs/zfs#7257 is my best friend